Birds Around!

The Problem

People often talk about tracking wild animals, and there are now many adaptable products on the market that use GPS and connectivity to follow some of them in the wild. But this implies capturing the animal and attaching a circuit to it, which is complicated and often traumatic for the animal. Moreover, small animals can only carry miniature circuits, and shrinking the device comes at the expense of performance (battery life, communication range, amount of data, dependence on sunlight...). Maybe the approach could be different: instead of focusing on one animal, we could focus on every animal in one area. This could be done by using ubiquitous technology to map animals around the world.

Our Proposal

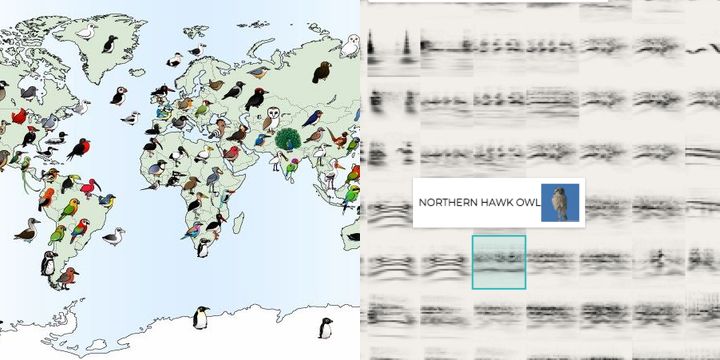

The objective is to draw a map showing where all the animals in the world live. Not, of course, to track every animal in real time, but just to understand visually who lives where. Does this sound impossible? "Yes, like internet 40 years ago". This is why it would be interesting to create an application capable of listening to and interpreting nature. Each type of bird, for example, has its own sound. A microphone backed by deep learning could recognize these sounds and extend its database to better understand which types of animals live where...

There are already several apps on the internet that identify birds by their sound (maybe based on the public Google bird sound database). This means that part of the job has already been done. The second part is to create our own app that reports the birds (or other animals) it identifies, together with their location. With enough people participating, we will be able to create a precise map of where birds, and also other animals, live.

The good news is that you don't need much to build it. The basic system is a server to which you can send a recording so that it can be compared with known sounds. You can then access it from a smartphone application, a slightly modified Amazon Alexa device or a Raspberry Pi with a microphone.
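To make the "who lives where" map more concrete, here is a minimal sketch of how reported identifications could be grouped into map cells. Everything in it is an assumption made for illustration: the `Sighting` record, the 0.1 degree grid and the sample reports are hypothetical and not part of any existing code.

```python
from collections import defaultdict
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class Sighting:
    """One identified animal reported by a participant (hypothetical schema)."""
    species: str
    latitude: float   # degrees, -90..90
    longitude: float  # degrees, -180..180


def grid_cell(latitude: float, longitude: float, cell_deg: float = 0.1):
    """Return the index of the grid cell that contains the coordinate."""
    return (math.floor(latitude / cell_deg), math.floor(longitude / cell_deg))


def build_presence_map(sightings, cell_deg: float = 0.1):
    """Count the reports of each species inside each grid cell."""
    presence = defaultdict(lambda: defaultdict(int))
    for s in sightings:
        cell = grid_cell(s.latitude, s.longitude, cell_deg)
        presence[cell][s.species] += 1
    return presence


# Made-up sample reports, only to show the shape of the result.
reports = [
    Sighting("European robin", 48.8566, 2.3522),
    Sighting("European robin", 48.8570, 2.3530),
    Sighting("Common blackbird", 48.8566, 2.3522),
    Sighting("Common blackbird", 45.7640, 4.8357),
]
presence = build_presence_map(reports)
for cell, counts in sorted(presence.items()):
    print(cell, dict(counts))
```

Counting per cell rather than storing exact positions keeps the map about living places and not about individual animals, which matches the goal above. The cell size is a free parameter that would need tuning.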

We Assume that...

Like any app based on collaborative data, we are going to need as many people participating as possible!

Phone microphones are optimized for recording the human voice. We assume that current smartphones will record animal sounds with good enough audio quality.

Constraints to Overcome

We are going to need a large database of bird sounds to get started (it will be easier to focus only on birds at first), probably the one from Google (aiexperiments-bird-sounds) available on GitHub. Then we will start "learning" from new sounds, and that's going to be pretty complex to implement ^^ Another problem will be smartphone compatibility: since we need the microphone to listen, we will have to adapt to the characteristics of as many phone models as possible.
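As a rough illustration of what "learning from new sounds" could look like, here is a deliberately simple stand-in: a nearest-centroid matcher that keeps one average feature vector per species and updates it whenever a new labelled sound arrives. This is not deep learning and not the method used by any existing app. The class name `SpeciesCentroids` and the two-number feature vectors are hypothetical, and a real system would first have to extract meaningful features from the audio.

```python
import math


class SpeciesCentroids:
    """Nearest-centroid matcher (illustrative stand-in for a real model)."""

    def __init__(self):
        self._sums = {}    # species -> element-wise sum of feature vectors
        self._counts = {}  # species -> number of examples seen

    def add_example(self, species, features):
        """Learn from one more labelled sound, given as a feature vector."""
        if species not in self._sums:
            self._sums[species] = [0.0] * len(features)
            self._counts[species] = 0
        self._sums[species] = [a + b for a, b in zip(self._sums[species], features)]
        self._counts[species] += 1

    def centroid(self, species):
        """Average feature vector of all examples seen for this species."""
        n = self._counts[species]
        return [v / n for v in self._sums[species]]

    def classify(self, features):
        """Return (closest species, Euclidean distance to its centroid)."""
        best, best_dist = None, math.inf
        for species in self._sums:
            d = math.dist(self.centroid(species), features)
            if d < best_dist:
                best, best_dist = species, d
        return best, best_dist


# Made-up feature vectors, only to show the flow.
model = SpeciesCentroids()
model.add_example("robin", [1.0, 0.0])
model.add_example("robin", [3.0, 0.0])
model.add_example("blackbird", [0.0, 10.0])
print(model.classify([2.0, 1.0]))
```

The returned distance could serve as a crude confidence measure: a recording that is far from every centroid is probably a species (or a noise) the database does not know yet.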

Current Work

The idea is to share open-source information to better understand the evolution of wildlife. So, let's say 20,000 people using the app! ;) Right now, we have started developing an Android app (easier to start with!) using Android Studio. The libraries for accessing the microphone aren't too hard to use, but we still have to figure out how to send the files to the server so that they can be used for comparison.
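One possible way to get a recording to the server is to wrap the audio and its location in a single JSON message. The sketch below shows that idea in Python, which would suit the Raspberry Pi client and the server side. The Android app would have to build the same message with its own libraries. The field names (`audio_b64`, `latitude`, `longitude`, `recorded_at`) and both function names are assumptions, not an existing API.

```python
import base64
import json


def build_upload_payload(audio_bytes: bytes, latitude: float,
                         longitude: float, recorded_at: str) -> bytes:
    """Client side: pack a recording and its location into one JSON message."""
    body = {
        # JSON cannot carry raw bytes, so the audio is base64-encoded.
        "audio_b64": base64.b64encode(audio_bytes).decode("ascii"),
        "latitude": latitude,
        "longitude": longitude,
        "recorded_at": recorded_at,  # ISO 8601 timestamp as a string
    }
    return json.dumps(body).encode("utf-8")


def parse_upload_payload(payload: bytes):
    """Server side: recover the audio bytes and the metadata."""
    body = json.loads(payload.decode("utf-8"))
    audio = base64.b64decode(body["audio_b64"])
    return audio, body["latitude"], body["longitude"], body["recorded_at"]


# Round trip with placeholder bytes standing in for a real audio file.
fake_audio = b"\x00\x01\x02 not real audio"
payload = build_upload_payload(fake_audio, 48.8566, 2.3522, "2018-05-01T06:30:00Z")
audio, lat, lon, when = parse_upload_payload(payload)
print(len(payload), lat, lon, when)
```

Base64 makes the message roughly a third larger than the raw audio. For long recordings, a multipart file upload would probably be the better choice.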

Current Needs

Buy the different bird-recognition apps. An iOS developer (but Android is enough for the beginning). Maybe meet some deep learning specialists. We will probably need to buy several phones to test the app.